Think like a brain!

Neuromorphic Computing is an integrative area of study that benefits from collaboration between Neuroscience and Engineering. This journal club aims to facilitate this collaboration by providing a semi-formal environment to share research and ideas with peers. Subscribe to our email list to be notified of upcoming meetings.

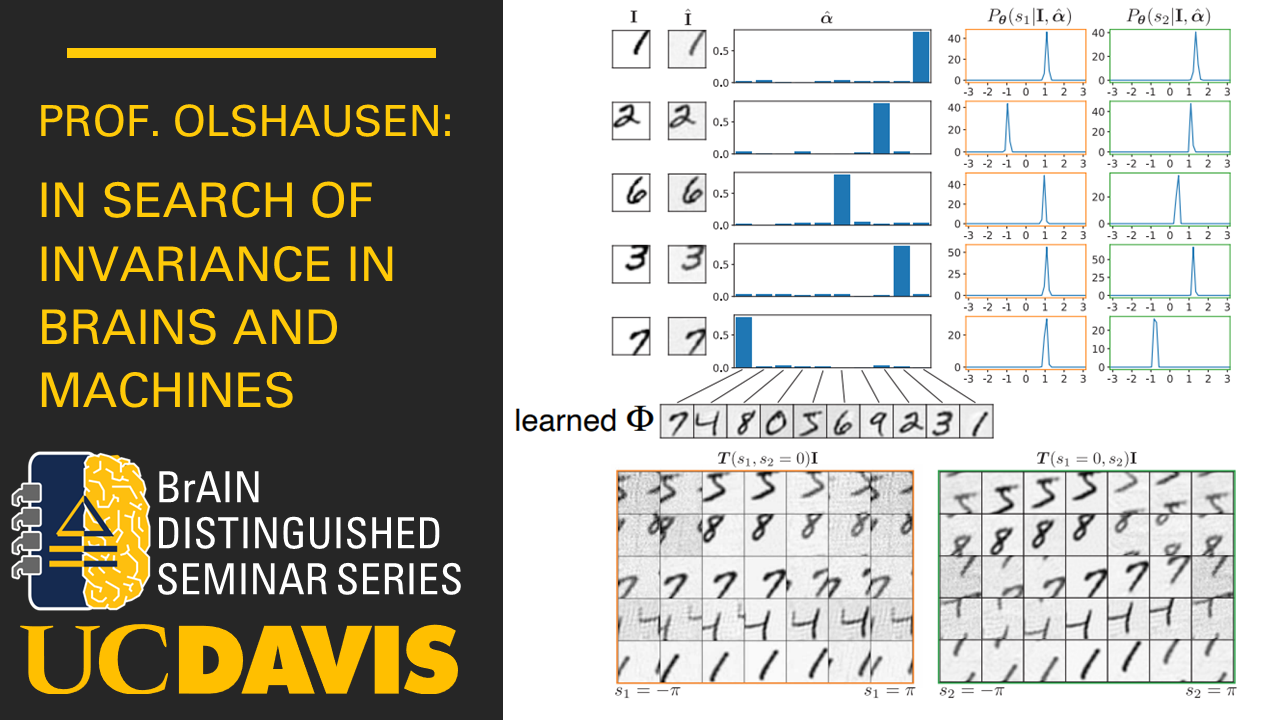

In Search of Invariance in Brains and Machines

By Prof. Olshausen

Abstract: Despite their seemingly impressive performance at image recognition and other perceptual tasks, deep convolutional neural networks are easily fooled, are sensitive to adversarial attacks, and have trouble generalizing to data outside the training domain, such as the data that arise from everyday interactions with the real world. The premise of this talk is that these shortcomings stem from the lack of an appropriate mathematical framework for posing the problems at the core of deep learning: in particular, modeling hierarchical structure and describing transformations, such as variations in pose, that occur when viewing objects in the real world. Here I will describe an approach that draws on a well-developed branch of mathematics for representing and computing these transformations: Lie theory. In particular, I will describe a method for learning shapes and their transformations from images in an unsupervised manner using Lie Group Sparse Coding. Additionally, I will show how the generalized bispectrum can potentially be used to learn invariant representations that are complete and impossible to fool.
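The idea of a bispectrum as a shift-invariant representation can be illustrated in its simplest setting. This is a minimal sketch, not the method presented in the talk: it assumes the classical bispectrum of a 1-D signal under cyclic translation (integers mod n) rather than the generalized bispectrum over Lie groups, and the function name `bispectrum` is hypothetical. A cyclic shift by s multiplies each Fourier coefficient F[k] by exp(-2πi·k·s/n), so the phases cancel in the triple product F[k1]·F[k2]·conj(F[(k1 + k2) mod n]).

```python
import numpy as np

def bispectrum(x):
    """Classical bispectrum of a 1-D signal under cyclic translation.

    Hypothetical helper for illustration only; not the talk's
    generalized (Lie group) bispectrum.
    """
    f = np.fft.fft(x)
    n = len(x)
    k = np.arange(n)
    # B[k1, k2] = F[k1] * F[k2] * conj(F[(k1 + k2) mod n]), built by broadcasting
    return f[:, None] * f[None, :] * np.conj(f[(k[:, None] + k[None, :]) % n])

rng = np.random.default_rng(0)
x = rng.standard_normal(16)
x_shifted = np.roll(x, 5)  # cyclic shift by 5 samples

# The shift's phase factors cancel, so both bispectra agree
print(np.allclose(bispectrum(x), bispectrum(x_shifted)))  # True
```

Unlike the power spectrum |F[k]|², which is also shift-invariant but discards all phase information, the bispectrum keeps relative phases, which is why it determines a generic signal (one with no zero Fourier coefficients) up to a shift.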

Functional self-reconfiguration processes in neuronal networks

By Christoph Kirst

Abstract: Flexible function is essential for the brain to cope with varying environments, changes in the quality of sensory information, and context dependency. This requires organized communication and flexible information processing among the different sub-circuits in neuronal networks. Although network oscillations, and in particular coherent dynamics between different sub-networks, have been shown to change correlation structures, the precise mechanisms for the self-regulated processing of information in brains are not well understood.

A Probabilistic Future for Neuromorphic Computing

By Dr. Aimone

Abstract: As Moore’s Law comes to an end, new approaches to microelectronics research are important for achieving much-needed improvements in computing capability for both artificial intelligence and modeling and simulation applications. In this talk, I will introduce the concept of probabilistic neuromorphic computing, a new approach to microelectronics and computing that uses the natural stochasticity of physical devices as a source of randomness for advanced computing applications. Our project, COINFLIPS (short for Co-designed Improved Neural Foundations Leveraging Inherent Physics Stochasticity), focuses on developing new probabilistic computing technologies that bridge materials science, device physics, computer architecture, and theoretical algorithms research. This research aims to have a long-term impact on uncertainty-aware artificial intelligence and on probabilistic modeling and simulation capabilities.

slides: COINFLIPS_UCDavis.pdf

Reverse Engineering the Cognitive Brain in Silicon and Memristive Neuromorphic Systems

By Prof. Cauwenberghs

Abstract: Prof. Cauwenberghs presents neuromorphic cognitive computing systems-on-chip implemented in custom silicon compute-in-memory neural and memristive synaptic crossbar array architectures. These architectures combine the efficiency of local interconnects with flexibility and sparsity in global interconnects, and they realize a wide class of deeply layered and recurrent neural network topologies with embedded local plasticity for online learning, at a fraction of the computational and energy cost of implementation on CPU and GPGPU platforms. Co-optimization across the hardware and algorithm abstraction layers leverages the inherent stochasticity in the physics of synaptic memory devices and neural interface circuits, together with plasticity in reconfigurable, massively parallel architectures, toward high system-level accuracy, resilience, and efficiency. Adiabatic energy recycling in charge-mode crossbar arrays permits extreme scaling in energy efficiency, approaching that of synaptic transmission in the mammalian brain.

slides: Reverse Engineering the Cognitive Brain in Silicon and Memristive Neuromorphic Systems_0.pdf